Every major platform upgrade has a moment where a supporting feature quietly becomes a foundation.

For DCH 3.0, that moment is Workflows.

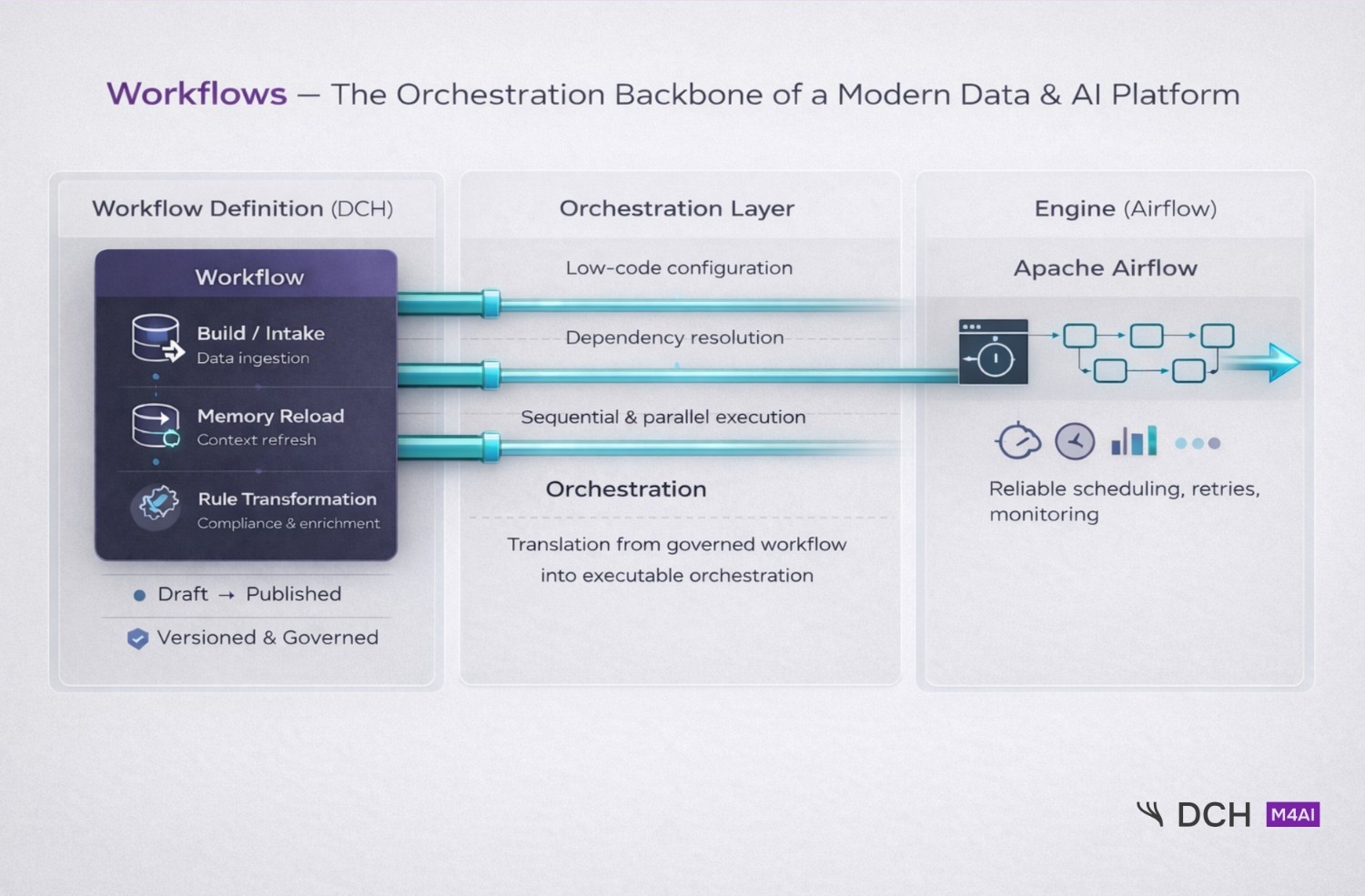

What used to exist as Load Plans has now evolved into a first-class execution backbone designed not just to move data, but to orchestrate real enterprise processes safely, repeatably, and at scale.

This shift is not cosmetic. It reflects a deeper change in how C64 approaches automation, governance, and execution across DCH and M4AI.

From Load Plans to Workflows

Load Plans were originally created to solve a narrow problem: triggering structured ingestion jobs in a defined order.

Workflows solve a broader one.

They provide a versioned orchestration layer that can define, test, publish, and operate complex execution logic without turning the platform into a brittle collection of scripts or ad-hoc pipelines.

Designed for Safe Change: Draft → Published Versioning

One of the most important changes in DCH 3.0 is how execution logic evolves over time.

Every workflow now supports explicit version states:

- Draft – for building and testing safely

- Published – the active production version

- Archived – preserved history, no longer active

At any point:

- There is at most one draft and one published version.

- Publishing a new version automatically archives the previous one.

This mirrors how real organizations operate. Execution logic changes, but production stability must never depend on experimentation.

With workflows, testing and execution are deliberately separated.
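The versioning rules above can be read as a small state machine. The following is a minimal sketch of that reading, not the DCH implementation: the `Workflow` and `Version` classes, their method names, and the version numbering are all hypothetical and exist only to illustrate the "at most one draft, at most one published" invariant.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    DRAFT = "draft"
    PUBLISHED = "published"
    ARCHIVED = "archived"


@dataclass
class Version:
    number: int
    state: State


@dataclass
class Workflow:
    # Hypothetical model; DCH's real data model is not described in this post.
    name: str
    versions: list[Version] = field(default_factory=list)

    def _current(self, state: State) -> Version | None:
        # Return the single version in the given state, if there is one.
        for version in self.versions:
            if version.state is state:
                return version
        return None

    def create_draft(self) -> Version:
        # Enforce "at most one draft".
        if self._current(State.DRAFT) is not None:
            raise ValueError("a draft already exists")
        draft = Version(number=len(self.versions) + 1, state=State.DRAFT)
        self.versions.append(draft)
        return draft

    def publish(self) -> Version:
        draft = self._current(State.DRAFT)
        if draft is None:
            raise ValueError("nothing to publish")
        previous = self._current(State.PUBLISHED)
        if previous is not None:
            # Publishing archives the previously published version.
            previous.state = State.ARCHIVED
        draft.state = State.PUBLISHED
        return draft
```

Publishing twice in a row leaves the first version archived and the second published, so production always points at exactly one version.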

Tasks That Reflect Real Processes

Workflows are composed of tasks, each representing a concrete step in an execution chain.

Tasks can:

- Run sequentially

- Execute in parallel

- Depend on conditions and prior outcomes

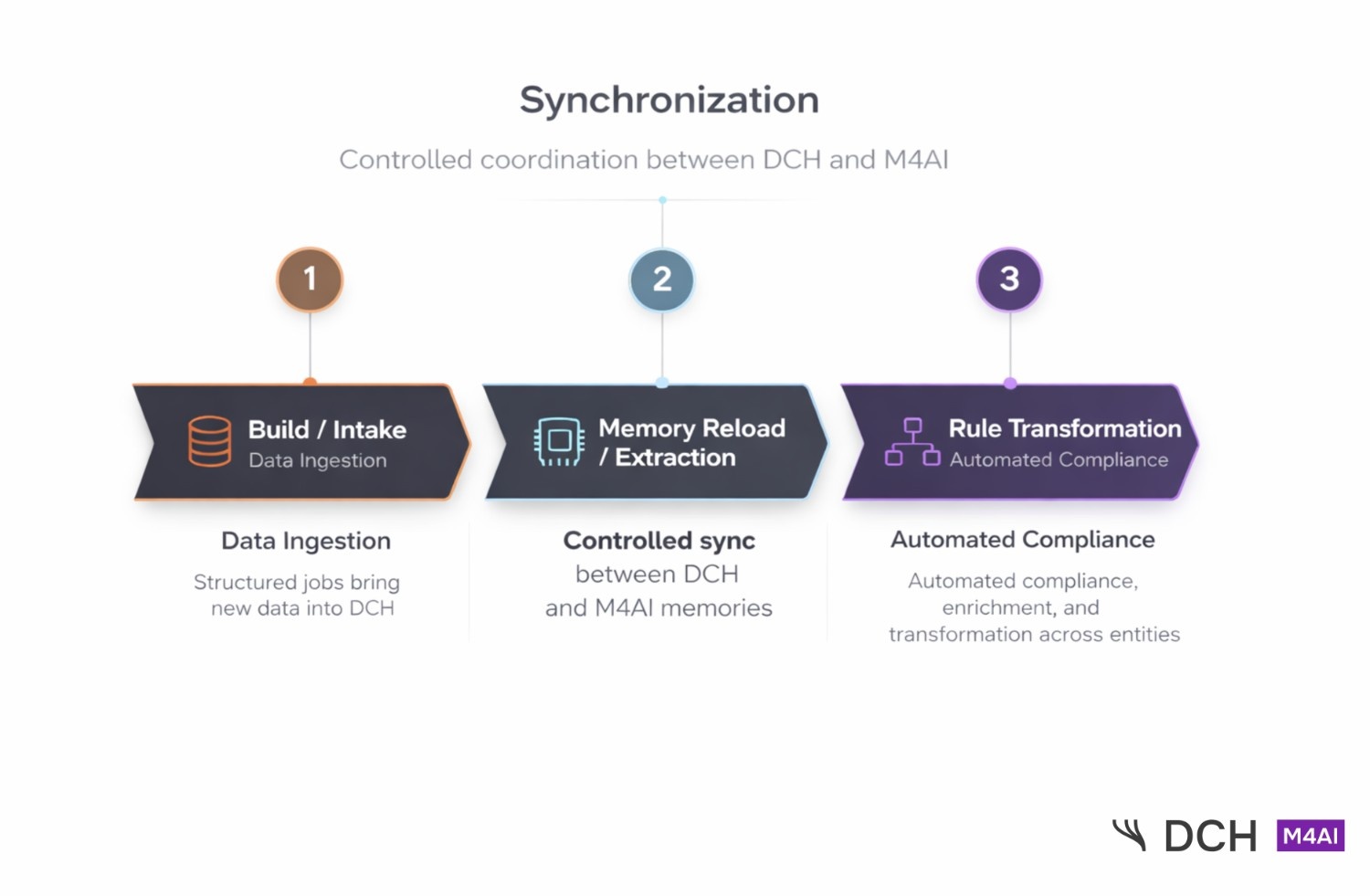

The initial task types are deliberately focused on core platform flows:

- Build / Intake: Structured ingestion jobs that bring new data into DCH.

- Memory Reload / Extraction: Controlled synchronization between DCH and M4AI memories.

- Rule Transformation: Automated compliance, enrichment, and structural transformation across entities and relationships.

Each task is defined declaratively: what should happen and under what conditions, not how it should be hardcoded.

This keeps workflows understandable for humans and reliable for systems.
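To make "declarative" concrete, here is a rough sketch of how a task list with dependencies could be turned into execution stages. The dictionary layout, the task names, and the `type` values are assumptions made for illustration; the post does not show the actual DCH task format, and conditions on prior outcomes are left out for brevity.

```python
from graphlib import TopologicalSorter

# Hypothetical declarative definition: each task states its type and
# which tasks must finish first. Nothing here says how to run them.
workflow = {
    "intake": {"type": "build_intake", "depends_on": []},
    "reload_memory": {"type": "memory_reload", "depends_on": ["intake"]},
    "apply_rules": {"type": "rule_transformation", "depends_on": ["intake"]},
    "extract": {
        "type": "memory_extraction",
        "depends_on": ["reload_memory", "apply_rules"],
    },
}


def execution_stages(tasks):
    """Group tasks into ordered stages; tasks within a stage can run in parallel."""
    graph = {name: spec["depends_on"] for name, spec in tasks.items()}
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


stages = execution_stages(workflow)
```

In this example the intake task runs first, the memory reload and rule transformation run side by side, and the extraction waits for both.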

Industrial-Grade Execution with Apache Airflow

While DCH defines and governs workflows, execution itself is handled by a proven engine: Apache Airflow 3.1.

This separation is intentional.

When a workflow is published:

- DCH automatically generates a corresponding DAG.

- That DAG is uploaded to Airflow.

- Scheduling, retries, logging, and monitoring are handled by Airflow.

In effect:

- DCH acts as the design and governance layer

- Airflow acts as the execution engine

Low-code configuration in DCH becomes executable orchestration in a battle-tested runtime. No custom schedulers. No hidden cron logic. No fragile glue code.
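How DCH generates its DAGs is not shown in this post, but the general idea of turning a task specification into an Airflow DAG file can be sketched. In the example below, `render_dag_source`, the DAG id, and the task names are hypothetical. The generated file uses `DAG` from `airflow.sdk` and `EmptyOperator` from the standard provider as placeholders, where a real generator would presumably choose an operator per task type. Uploading the file to Airflow is not covered.

```python
def render_dag_source(dag_id, tasks):
    """Return Python source for an Airflow DAG file.

    `tasks` maps each task name to the list of tasks it depends on.
    """
    lines = [
        "from airflow.sdk import DAG",
        "from airflow.providers.standard.operators.empty import EmptyOperator",
        "",
        f"with DAG(dag_id={dag_id!r}, schedule=None) as dag:",
        "    ops = {}",
    ]
    for name in tasks:
        # Placeholder operator; it does no work when the DAG runs.
        lines.append(f"    ops[{name!r}] = EmptyOperator(task_id={name!r})")
    for name, upstream in tasks.items():
        for parent in upstream:
            # `a >> b` tells Airflow that b runs after a.
            lines.append(f"    ops[{parent!r}] >> ops[{name!r}]")
    return "\n".join(lines) + "\n"


source = render_dag_source(
    "nightly_intake",
    {"intake": [], "apply_rules": ["intake"]},
)

# Syntax check only: compiling does not import or require Airflow.
compile(source, "nightly_intake.py", "exec")
```

Generating plain DAG files keeps the runtime standard: Airflow sees an ordinary DAG and needs no knowledge of the tool that produced it.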

Draft and Production DAGs, Cleanly Separated

Versioning also extends into execution.

Each workflow can produce:

- A published DAG for production runs

- A draft DAG for manual testing

Draft executions never interfere with production schedules. When a draft is published, the system automatically reconciles the DAGs: cleanly, predictably, and without manual intervention.

Even edge cases are handled:

- Renaming a workflow updates its DAGs.

- Deactivating a workflow disables execution without deleting history.

This is the kind of operational hygiene enterprise teams expect.
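One way to keep draft and production DAGs apart is to give each its own DAG id derived from the workflow name. The naming scheme below, including the `__draft` suffix and both function names, is purely an assumption for illustration and is not taken from DCH.

```python
def dag_ids(workflow_name):
    """Hypothetical naming scheme: one DAG id per version state."""
    slug = workflow_name.strip().lower().replace(" ", "_")
    return {"published": slug, "draft": f"{slug}__draft"}


def rename_plan(old_name, new_name):
    """Map each existing DAG id to the id it should have after a rename."""
    old, new = dag_ids(old_name), dag_ids(new_name)
    return {old[state]: new[state] for state in old}


plan = rename_plan("Nightly Intake", "Daily Intake")
```

With distinct ids, a manual draft run can never be mistaken for the scheduled production run, and a rename becomes a predictable id-to-id mapping.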

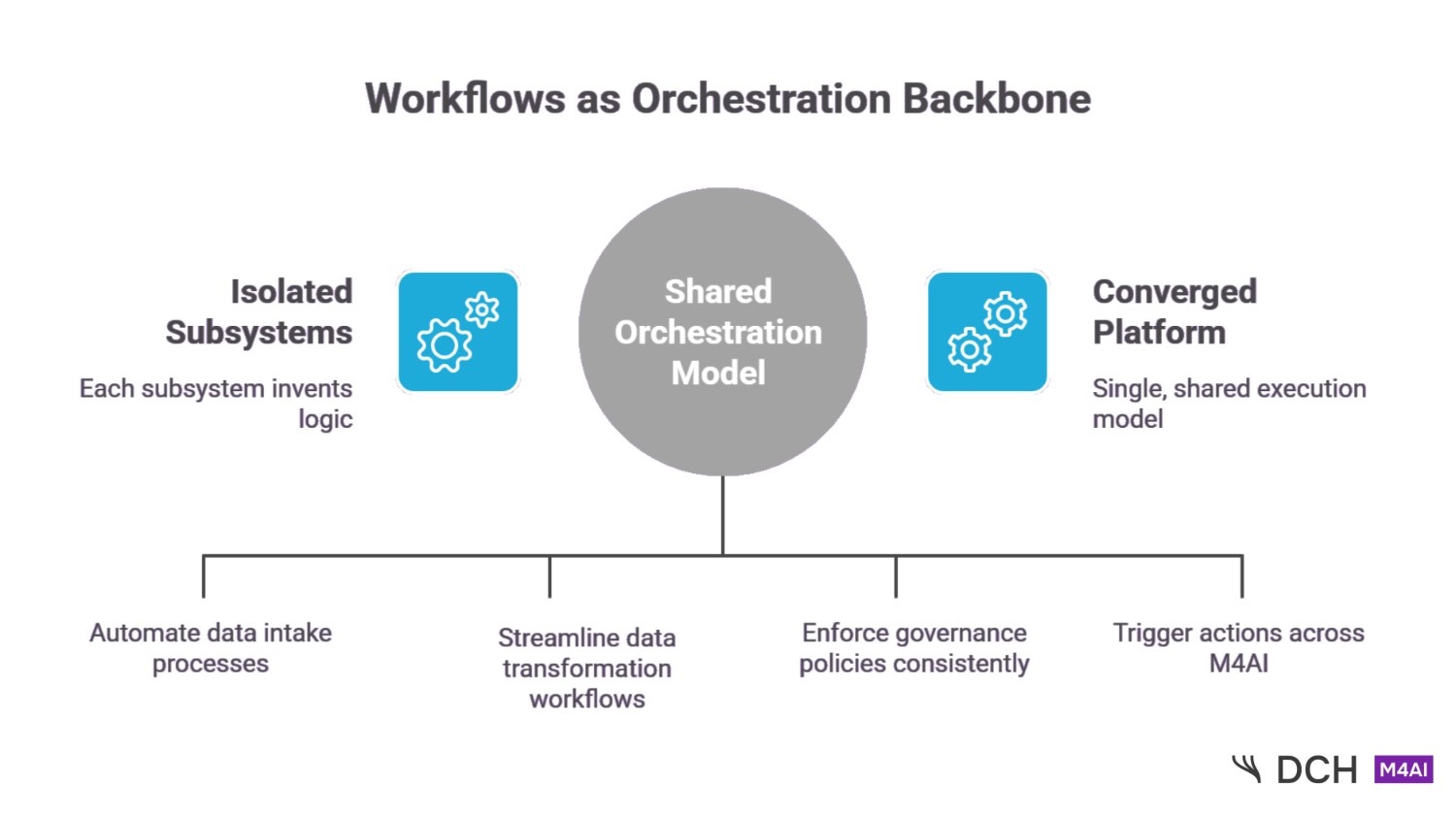

More Than Execution: A Shared Backbone

Workflows are not an isolated feature.

They are the orchestration backbone that future capabilities will build on:

- Intake automation

- Transformation pipelines

- Governance enforcement

- Agent-driven actions across M4AI

Instead of each subsystem inventing its own execution logic, the platform converges on a single, shared model.

One orchestration layer. Multiple execution contexts.

Why This Matters

Workflows mark a clear shift in DCH 3.0:

From configuration to execution.

From ad-hoc pipelines to governed automation.

From isolated jobs to a unified operational backbone.

They are not just a replacement for Load Plans. They are the foundation for how Context64.AI systems will run reliably, transparently, and at enterprise scale.

Context64.AI is a European technology company specializing in AI-powered data integration and contextual intelligence for complex engineering environments.